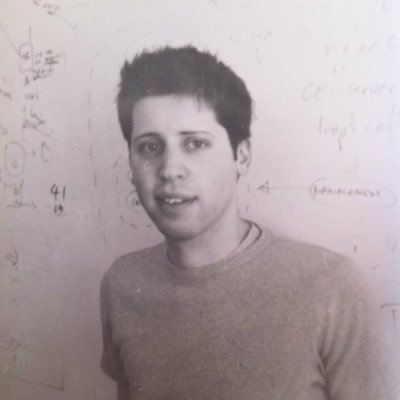

In recent years, the world has witnessed remarkable progress in science and technology, but it was Sam Altman’s OpenAI that truly ushered in a new era of artificial intelligence (AI). OpenAI is the company behind ChatGPT, an AI tool with the potential to transform how we work, shop, and interact.

OpenAI introduced two highly influential AI generators to the world. First came DALL-E, an image generator that captured global attention, followed by ChatGPT, a text generator that became a sensation in its own right. While ChatGPT and its capabilities have been discussed extensively, far less attention has been paid to the man behind this revolution, Sam Altman, who is leading the charge in AI. In this article, we look at Altman’s history, explore his psyche, and uncover his motivations for launching OpenAI.

A Curious Mind from the Start

Sam Altman was born on April 22, 1985, in Chicago, Illinois, and grew up in St. Louis, Missouri. Even as a child, he displayed an insatiable curiosity and a deep passion for science and technology. At the age of eight, he received his first computer, an Apple Macintosh, which became his gateway to the wider world. Soon after, he discovered AOL chat rooms, which had a transformative impact on his early personal development.

Although he was an intellectually precocious, self-proclaimed nerd, Altman also showed remarkable boldness. At 16, he came out as gay to his parents. At the time he was attending John Burroughs School, a prestigious private prep school in St. Louis, Missouri, and experiences related to his sexuality during his years there shaped his thinking and fostered a penchant for challenging conventional norms.

Venturing into Entrepreneurship

After high school, Altman enrolled at Stanford University to study computer science, but his entrepreneurial spirit soon led him down a different path. Together with two classmates, he began developing a mobile app called Loopt around the time Facebook and Twitter were getting started. People were already eager to know what others were up to and what was on their minds, but sharing one’s location from a phone was not yet a common feature.

Loopt capitalized on this idea, letting friends share their whereabouts with each other selectively. Such features raise privacy concerns today, but in the mid-2000s the concept was new and exciting, and Loopt was one of the first mobile apps to let users share their location. For context, Google Maps itself only launched in 2005, and Twitter and Facebook added location features in 2009 and 2010, respectively.

Recognizing the product’s potential, Altman dropped out of Stanford in 2005 to build the company full time. Developing the product required substantial investment, which he secured from Y Combinator and Sequoia Capital.

In the summer of 2005, Altman devoted himself tirelessly to the project. He met with mobile carriers, convincing them to feature the Loopt app. At that time, neither Google nor Apple had introduced app stores, so knocking on the doors of mobile carriers was the only viable option.

Altman’s persistence paid off, and the company was eventually valued at $175 million. Despite this, the app never achieved significant consumer adoption. Reflecting on the experience, Altman later remarked in an interview with The New Yorker, “We had the optimistic view that location would be all-important. The pessimistic view was that people would lie on their couches and just consume content, and that is what happened.”

In 2012, Altman and his fellow founders sold the company for $43 million, far below its peak valuation. Around the same time, his long-term relationship with Nick Sivo, his boyfriend and a Loopt co-founder, came to an end. This setback had profound implications for Altman, affecting both his personal and professional life. Nevertheless, he persevered and continued to make a name for himself in the industry.

Altman and the Influence of Y Combinator

A pivotal moment in Altman’s journey was his encounter with Paul Graham, a co-founder of Y Combinator. Y Combinator, a renowned seed-stage venture firm, has funded many successful startups, including Reddit, Airbnb, Dropbox, Twitch, and Altman’s own Loopt. Altman’s tenacity caught Graham’s attention early: Loopt was one of the eight companies in Y Combinator’s very first batch in 2005. Although Loopt never became a major success, Graham recognized Altman’s entrepreneurial potential and invited him to join Y Combinator as a part-time partner in 2011. Altman played a significant role in shaping the Y Combinator experience for founders, and in 2014 he became its president, succeeding Paul Graham.

The Unconventional Nature of Sam Altman

Sam Altman has a captivating mix of qualities that make him stand out in the tech industry. He typically maintains a calm demeanor, though he can occasionally show a formidable temper when pushed to his limits, and he is also notably quirky and eccentric. At a social gathering, when asked about his hobbies, he famously responded, “Well, I enjoy racing cars. I currently own five, including two McLarens and an older Tesla. I also have a penchant for flying rented planes across California. And here’s an odd one – I engage in survival preparation.”

Witnessing the bewilderment on his audience’s faces, Altman elaborated, “The issue is that when my friends become intoxicated, they often delve into discussions about apocalyptic scenarios. Since a Dutch lab modified the H5N1 bird flu virus five years ago, enhancing its contagiousness, the probability of a lethal synthetic virus being released within the next two decades is not negligible.”

The other popular scenarios, he added, were artificial intelligence turning against humanity and nations waging nuclear war over scarce resources.

Altman made these remarks in 2016. The COVID-19 pandemic that began in late 2019 showed that his worries about a global pandemic were not unfounded. While the specifics of the pandemic are beyond the scope of this article, Altman clearly had foresight about potential global threats. He also took proactive measures to prevent AI-related catastrophes, which we will explore further.

Altman’s Aspirations for Change and Entrepreneurial Investments

Aside from his idiosyncrasies, Altman possesses a genuine desire to make a positive impact on the world. Following the sale of Loopt, Altman established a small venture fund called Hydrazine Capital. This fund aimed to support technology companies in various sectors, including education, specialty foods, hospitality, consumer networks, enterprise software, and internet-connected hardware.

Altman’s ability to spot hidden potential and fix flaws was evident at Reddit, where he invested and briefly served as CEO. In 2014, Reddit was in disarray, and Altman played a pivotal role in securing $50 million in funding to revitalize the company.

Altman also has a strong interest in nuclear energy and has invested in, and joined the boards of, several fusion and fission startups, including Helion Energy and Oklo. His rationale is that hard, ambitious ventures tend to attract more attention and talent than easy ones. Pitching yet another social media startup might get a lukewarm response from investors, whereas an AI company built on deep computer science expertise would likely generate substantial interest.

Over time, Altman’s dedication and accomplishments made him a prominent figure in Silicon Valley. His mentor Paul Graham has said that Altman is exceptionally good at gaining and using power.

Motivation Behind OpenAI

Artificial intelligence (AI) has made significant strides in recent years, with research breakthroughs shaping the future of many industries. A pivotal moment came in 2012, when a paper by Geoffrey Hinton and his students showed that deep neural networks could recognize objects in images far more accurately than earlier methods. That same year, while hiking with friends in San Francisco, Sam Altman had a profound realization about the potential of AI and what it might mean for human uniqueness. This section explores that question and the concerns it raised for people like Sam Altman and Elon Musk.

As the discussion on human intelligence and AI unfolded during Altman’s hike, he began to question the notion of human uniqueness. Despite acknowledging certain aspects that remain exclusive to humans, such as genuine creativity, inspiration, and the ability to empathize, Altman started contemplating a future where computers could mimic human outputs. This realization planted the seed of fear within him, a concern that AI could potentially strip humanity of its distinctiveness.

Sam Altman’s Revelation

It was not until 2016, in an interview with The New Yorker, that Sam Altman opened up about his apprehensions regarding the future of AI. He acknowledged the advantages machines have over humans, particularly their far higher input-output rate, and described humans as “slowed-down whale songs,” limited to taking in information at roughly two bits per second. Recognizing how much more capable machines could become intensified Altman’s concerns and compelled him to act.

Sam Altman’s fears were shared by another prominent figure in tech, Elon Musk, who had long been outspoken about the dangers of AI. Yet rather than steering clear of AI, both Altman and Musk chose to take part in developing it. They believed that by helping create and advance the technology, they could steer it in a direction that benefits humanity.

Altman and Musk recognized that their fears were not unfounded and that AI could indeed become overwhelmingly powerful if left unchecked. Therefore, they understood the importance of assuming responsibility and actively shaping the future of AI. By being intimately involved in AI development, they aimed to guide the technology towards aligning with human values, ethics, and priorities.

The Rise and Challenges of OpenAI

In 2015, Altman joined forces with Elon Musk, Reid Hoffman, Jessica Livingston, Peter Thiel, and a group of highly qualified AI researchers and entrepreneurs to form OpenAI, an organization aimed at advancing artificial general intelligence (AGI) while ensuring that it is safe and benefits society. This section traces OpenAI’s origins, its mission, and the challenges it faced along the way.

OpenAI’s formation brought together brilliant minds and substantial financial backing: its backers pledged one billion dollars to fund its ambitious operations. OpenAI took an unusual approach, committing to make its patents and research freely available to the public and to collaborate with other institutions and researchers. These principles gave OpenAI its name, and they were meant to keep any single company, particularly Google with its DeepMind division, from dominating the field.

Concerns and Motivations

OpenAI’s founders were concerned about the potential risks of developing AGI. Elon Musk in particular urged caution, warning that a rogue AI could become an immortal, all-powerful dictator. His apprehensions centered on the possibility that AI systems built by competitors such as Google’s DeepMind would escape human control and manipulate outcomes in their own favor. OpenAI’s mission was therefore to counterbalance this threat by developing AGI in a safe and beneficial way.

OpenAI arrived with much fanfare, a star-studded roster of investors, and grand ambitions for the future. While its fears were evident, its concrete plans were less clear. OpenAI aimed to embrace curiosity and exploration, seeking to develop AGI capable of extraordinary feats like writing poetry or composing symphonies, while acknowledging that achieving such a system would require patience, dedication, and an embrace of the unknown.

Sam Altman, one of OpenAI’s founders, drew a parallel between AGI systems and a child learning about the world. Just as a child requires guidance and supervision, an AI system also needs proper oversight. OpenAI recognized the significance of creating a governance board that would provide a global platform for people to contribute to the development and deployment of this technology. This democratic approach sought to ensure that AGI would benefit society as a whole.

Internal Challenges and Power Struggles

Over time, internal conflicts emerged within OpenAI. In early 2018, Elon Musk, frustrated with the pace of progress, proposed taking control of the organization. The other founders, including Sam Altman and CTO Greg Brockman, rejected the proposal, leading to a power struggle. Musk eventually left, citing a potential conflict of interest with Tesla’s autonomous-driving work; Tesla had already hired away one of OpenAI’s researchers. Musk also halted the funding he had promised, leaving the non-profit in a precarious financial situation.

Training AI systems on supercomputers is enormously expensive, which posed a serious problem for OpenAI. The organization needed to find a viable way to sustain its operations.

Embracing Google’s Transformer Model

In 2017, researchers at Google Brain introduced a groundbreaking neural network architecture known as the Transformer, which revolutionized natural language processing tasks such as machine translation and text generation. Transformer models kept getting better as they were made larger and trained on more data, with no obvious ceiling in sight. However, that scaling came at a considerable cost in computing power.

Recognizing the potential of the Transformer model, OpenAI made a significant decision in 2019. It created a for-profit arm that capped investors’ returns, with any profit beyond the cap flowing back to the original non-profit, which retained control. Notably, Altman declined any equity in the new entity.

Microsoft Partnership and Investments

Although the move to a for-profit structure raised concerns among some, Microsoft partnered with OpenAI less than six months later. It initially invested one billion dollars and provided the cloud computing infrastructure OpenAI needed to train its large AI models. This collaboration eventually led to ChatGPT and the image generator DALL-E. Following ChatGPT’s tremendous success, Microsoft announced in January 2023 a further investment reported at 10 billion dollars. In exchange, Microsoft gained access to some of the world’s most advanced and sought-after AI models, which it has integrated into its consumer and enterprise products.

The partnership with Microsoft was a double-edged sword for OpenAI. It propelled the organization forward, but it also pulled it away from its original principles. Research that had once been open and freely accessible was now guarded from competitors, and Microsoft reportedly pressed the company to maintain its competitive edge and release AI products faster.

The AI War and Google’s Reaction

Microsoft’s partnership with OpenAI, together with ChatGPT’s early success, sent shockwaves through Google. Google had traditionally kept its most advanced AI systems in-house, whereas OpenAI released its products directly to the public. This marked the beginning of the so-called AI War, a topic I have discussed frequently. Because AI tools are still in their infancy, the results have ranged from extraordinary to ludicrous, which raises a question: why release an unfinished product?

According to Sam Altman, the benefits of putting such powerful technology in many people’s hands outweigh the risks of leaving it under the control of a single party. Either way, the proverbial cat is out of the bag, and numerous companies are building their own products on top of AI tools. Governments and the general public are playing catch-up; the White House held its first meeting with AI company leaders only in May 2023.

The Future of AI and Cautionary Words

As we witness the early stages of AI development, one thing is certain: it will only improve with time and at a rapid pace. The distant future holds endless possibilities, both positive and negative. In the words of Sam Altman, “I think it’d be crazy not to be a little bit afraid.”

In today’s rapidly evolving technological landscape, superintelligence and rogue AI often capture our imagination. However, Sam Altman has stressed that these futuristic scenarios are not the most immediate threats. The nearer-term challenges, such as misinformation and economic shocks, are more commonplace but equally critical. Addressing them and aligning AI with human values could help create a better world for everyone, though caution remains paramount as we explore the capabilities of artificial intelligence.

Unlocking the Potential of AI Safety to Create a Better World

Promising advancements in AI are already transforming various industries and making a tangible difference in people’s lives. A notable example can be found at Cincinnati Children’s Hospital, where Dr. John Pestian and Dr. Tracy Glauser are leveraging AI technology to proactively address the issue of suicide among children. Their innovative approach involves training an AI system capable of identifying children at risk of suicide. This powerful tool provides pediatricians with a quick overview of which kids require immediate intervention.

With this system, pediatricians can get crucial insights at a glance. It sorts patients into three categories: “This is a patient at high risk,” “This is a patient at low risk,” or “We just don’t have enough data right now.” By identifying children at high risk of suicide early on, medical professionals can intervene and provide the necessary support, potentially saving lives.

It is worth noting that a significant proportion of adult mental illnesses originate in childhood. Detecting these early signs is crucial for timely intervention and appropriate treatment. By using AI to identify children who may be at risk, medical practitioners can address this pressing issue and provide support before it escalates.

The potential impact of AI-driven suicide prevention in children is immense. By harnessing the power of technology, we can create a safer environment for vulnerable individuals and enhance our society’s overall well-being. However, it is vital to proceed with caution, ensuring that AI systems are continually refined and optimized to deliver accurate results. Rigorous testing and ongoing evaluation are necessary to maintain the highest standards of safety and efficacy.

As we navigate the future of AI, it is essential to recognize the immense potential it holds to revolutionize various aspects of our lives. While superintelligence and rogue AI may capture our attention, the more immediate threats we face are rooted in our everyday challenges. By prioritizing AI safety and aligning it with human values, we can leverage this transformative technology to build a brighter and more secure future. The progress made in utilizing AI to prevent child suicide is a testament to the positive impact we can achieve when we approach AI development responsibly and ethically.

The Impact of AI on Our World

Technology has always played a pivotal role in shaping our world. From the advent of the wheel to the revolutionary power of the internet, each new innovation has faced its fair share of criticism and praise. While the internet has shown its darker side, spreading misinformation and creating division, the emergence of Artificial Intelligence (AI) is set to further transform our lives. However, instead of dwelling solely on the potential negatives, it is essential to recognize the numerous ways AI is already revolutionizing various industries, such as game development, video production, copywriting, coding, and more. By automating repetitive and cognitive tasks, AI enables professionals to focus on their passions and areas of expertise, which is undoubtedly a positive development.

Notably, AI has become a valuable tool for enhancing creativity. Although some may argue that AI’s primary function is to automate tasks, it can also be leveraged to augment human ingenuity. By combining human creativity with AI-powered tools, individuals can achieve remarkable breakthroughs and uncover novel solutions. However, it is crucial to exercise wisdom and responsibility when using these tools. As the science fiction writer Isaac Asimov once lamented, “The saddest aspect of life right now is that science gathers knowledge faster than society gathers wisdom.” Hence, we must strike a balance between the rapid advancement of AI technology and our ethical responsibility to use it judiciously.

Conclusion

While acknowledging the vast benefits of AI, it is essential to address the potential challenges it may pose in the future. In contemplating worst-case scenarios, we may wonder if individuals like Sam Altman, who aims to prevent AI from going awry, could inadvertently become vilified in history. Only time will reveal the ultimate consequences of AI’s development and deployment. However, rather than succumbing to fear or pessimism, we must remain vigilant and proactive in shaping the trajectory of AI.

To ensure a positive outcome, it is incumbent upon us to use AI responsibly. We must recognize that while AI possesses immense potential, it also demands careful guidance. Policymakers, technologists, and society as a whole should work together to establish robust frameworks and ethical guidelines governing the use of AI. By fostering collaboration and proactive discussions, we can steer AI’s evolution towards a future that benefits humanity as a whole.